Introduction

As a startup, you’re always designing for growth. When usage spikes, or when you onboard 3x more customers, your backend needs to be ready. One of the most overlooked levers in backend performance is bulk routing — the practice of handling multiple data items in a single request.

Bulk routes and bulk processing backends can be dramatically more efficient than one-at-a-time architectures. They reduce overhead, improve throughput, and allow systems to scale more gracefully.

While this concept applies across the stack, from APIs to message queues, it’s especially important at the data layer. In this post, we’ll explore how implementing bulk insert strategies in PostgreSQL can significantly improve write performance, and how small changes can make a big difference.

The Power of Bulk Implementation

When you’re inserting data into a database one row at a time, you’re paying a high cost:

- Each INSERT is a network round trip.

- The database must parse, plan, and execute each statement individually.

- Index maintenance, constraint checks, and write-ahead log (WAL) writes happen separately for every insert.

Bulk inserts reduce this overhead by batching multiple rows into a single INSERT statement. This allows PostgreSQL to:

- Parse and plan the statement once for all rows in the batch.

- Apply constraints and indexes more efficiently.

- Write to disk and WAL in larger, more efficient chunks.

Bulk operations are not just faster — they often lead to simpler, more scalable backends.
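To make the idea concrete, here is a minimal Python sketch of how rows might be grouped into batches and turned into one multi-row INSERT per batch. The helper names (`chunked`, `build_bulk_insert`) and the `events` table are hypothetical and used only for illustration. The `%s` placeholder style assumes a driver such as psycopg; other drivers may expect a different style.

```python
from typing import Any, Iterator, Sequence


def chunked(rows: Sequence[Sequence[Any]], batch_size: int) -> Iterator[Sequence[Sequence[Any]]]:
    """Yield consecutive slices of at most batch_size rows."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(rows), batch_size):
        yield rows[start:start + batch_size]


def build_bulk_insert(
    table: str, columns: Sequence[str], batch: Sequence[Sequence[Any]]
) -> tuple[str, list[Any]]:
    """Return one multi-row INSERT statement and its flat parameter list.

    The table and column names are interpolated directly into the SQL text,
    so they must come from trusted code, never from user input. Row values
    are passed as parameters and are not interpolated.
    """
    # One "(%s, %s, ...)" group per row, one placeholder per column.
    row_placeholder = "(" + ", ".join(["%s"] * len(columns)) + ")"
    values_clause = ", ".join([row_placeholder] * len(batch))
    sql = f"INSERT INTO {table} ({', '.join(columns)}) VALUES {values_clause}"
    # Flatten the rows so the parameters line up with the placeholders.
    params = [value for row in batch for value in row]
    return sql, params
```

With a database cursor in hand, each batch then costs one round trip: loop over `chunked(rows, batch_size)` and call `cursor.execute(sql, params)` with the result of `build_bulk_insert` for that batch.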

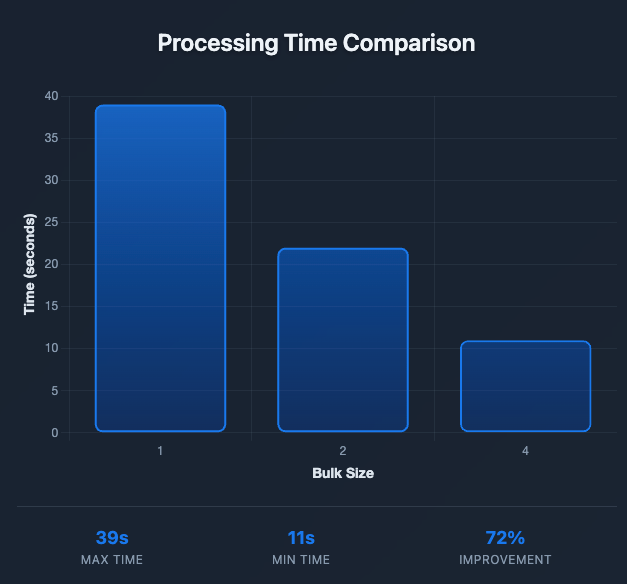

Benchmark: Bulk Size vs. Performance

To measure the impact, I inserted 300,000 rows into a PostgreSQL table with typical production-like fields (JSONB, timestamp, numeric, etc.) and 3 indexes, using different batch sizes. Here’s what I found:

Key Observations:

- Even a batch size of 2 nearly halves insert time.

- At 4 rows per batch, total time dropped by over 70%.

- This shows that even small batches provide major benefits.
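If you want to try this kind of measurement yourself, the sketch below times the same number of rows at different batch sizes. It is not the benchmark code behind the numbers above: it uses Python's built-in `sqlite3` with an in-memory database as a stand-in so it runs without a server, and the `events` table, its columns, and the `time_inserts` function are made up for illustration. SQLite has no network round trip, so the results will not match PostgreSQL. Treat it as a template to adapt to your own driver and schema.

```python
import sqlite3
import time


def time_inserts(total_rows: int, batch_size: int) -> float:
    """Insert total_rows rows in batches of batch_size; return elapsed seconds."""
    conn = sqlite3.connect(":memory:")  # stand-in for a real PostgreSQL connection
    conn.execute("CREATE TABLE events (id INTEGER, payload TEXT, amount REAL)")
    conn.execute("CREATE INDEX idx_events_id ON events (id)")
    rows = [(i, f"payload-{i}", i * 0.5) for i in range(total_rows)]

    start = time.perf_counter()
    for offset in range(0, total_rows, batch_size):
        batch = rows[offset:offset + batch_size]
        # Build one multi-row INSERT for this batch.
        placeholders = ", ".join(["(?, ?, ?)"] * len(batch))
        params = [value for row in batch for value in row]
        conn.execute(
            f"INSERT INTO events (id, payload, amount) VALUES {placeholders}",
            params,
        )
    conn.commit()
    elapsed = time.perf_counter() - start

    # Sanity check: every row should have been written.
    inserted = conn.execute("SELECT COUNT(*) FROM events").fetchone()[0]
    conn.close()
    if inserted != total_rows:
        raise RuntimeError(f"expected {total_rows} rows, found {inserted}")
    return elapsed


if __name__ == "__main__":
    for size in (1, 2, 4):
        print(f"batch size {size}: {time_inserts(3000, size):.4f} s")
```

Run each batch size several times and compare medians, since a single run can be noisy.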

Check out the full benchmark code and setup on GitHub.

Conclusion

Bulk routes and batched inserts are among the most effective ways to improve backend throughput, especially with PostgreSQL. Even small batches can deliver massive gains with minimal code changes. The data speaks for itself.

That said, as I noted in "How to Make Backend Optimization Actually Worth It", it’s always worth asking: does this improvement support your actual goals?

Performance is valuable — but only when it aligns with what matters most.